Thursday, April 10, 2014

what was the legal issue again? dickishness takes on a life of its own...,

By CNu at April 10, 2014 | 0 comments

Labels: 2parties1ideology , Livestock Management , partisan , political theatre

parliamentary theatrical dickishness signifying nothing...,

By CNu at April 10, 2014 | 0 comments

Labels: 2parties1ideology , political theatre

prologue...,

"You don't know what the FBI did. You don't know what the FBI's interaction was with the Russians. You don't know what questions were put to the Russians, whether those questions were responded to. You simply do not know that. And you have characterized the FBI as being not thorough, or taken exception to my characterization of them as being thorough. I know what the FBI did. You cannot know what I know. That is all."

With that, Louie grows furious. This is where the clip starts, as Louie has literally turned red. He wants the AG's head but -- NO! -- Louie's time has expired. So he attempts to counter-attack Holder using a parliamentary procedure, the "Point of Personal Privilege." Unfortunately, the "Privilege" can't be used to act like a Texas derpass. But Louie doesn't know it, or doesn't care, so on he blindly rants while the committee members repeatedly ask him to shut it. Finally, after growing fully crimson and unhinged, he blurts out the most magnificent defense of a dumb-dog congressman ever:

"HE TURN AND CAST ASPERSIONS ON MY ASPARAGUS."

By CNu at April 10, 2014 | 0 comments

Labels: 2parties1ideology , political theatre

Wednesday, April 09, 2014

without femtoaggressions to give it value, purpose, and meaning, the cathedral will implode

theatlantic | The study that might have put to rest much of the recent agitation about microaggressions has unfortunately never been published. Microaggressions, for those who are not up on the recent twists and turns of American public discourse, are the subtle prejudices found even in the most liberal parts of our polity. They are revealed when a lecturer cites mainly male sources and no gay ones, when we use terms such as “mankind,” or when we discuss what Michelle Obama wore when she visited pandas in China, something we would not note about a man. In short, they are the current obsession of political correctness squads.

By CNu at April 09, 2014 | 3 comments

Labels: Cathedral , institutional deconstruction

der Narzißmus der kleinen Differenzen (the narcissism of small differences)

By CNu at April 09, 2014 | 0 comments

Labels: as above-so below , Cathedral , What IT DO Shawty...

Tuesday, April 08, 2014

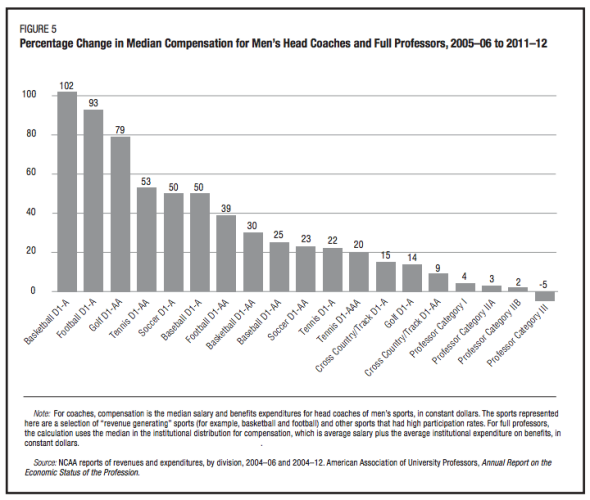

college coach pay...,

By CNu at April 08, 2014 | 23 comments

Labels: conspicuous consumption , Peak Capitalism , reality casualties

that's why you steal nutella out the cafeteria and keep you some hot pockets...,

By CNu at April 08, 2014 | 0 comments

Labels: edumackation , hustle-hard

the myth of working your way through college...,

This is interesting. A credit hour at MSU in 1979 cost $24.50; adjusted for inflation, that is $79.23 in today's dollars. One credit hour today costs $428.75.
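The arithmetic behind that comparison fits in a few lines. Below is a minimal Python sketch: the three dollar figures are the post's, while the wage figures are an assumption added for illustration (the federal minimum wage, $2.90 in 1979 and $7.25 in 2014, not actual MSU student pay), and the function names are hypothetical.

```python
# Dollar figures quoted in the post (MSU, per credit hour).
COST_1979_NOMINAL = 24.50
COST_1979_IN_2014_DOLLARS = 79.23  # the post's inflation-adjusted figure
COST_2014 = 428.75

# ASSUMPTION (not from the post): federal minimum wage in each year.
MIN_WAGE_1979 = 2.90
MIN_WAGE_2014 = 7.25

def real_increase(then_adjusted: float, now: float) -> float:
    """How many times more a credit hour costs now, after inflation."""
    return now / then_adjusted

def hours_of_work(cost: float, wage: float) -> float:
    """Hours of work at `wage` needed to pay for `cost`."""
    return cost / wage

# Price multiplier implied by the post's own inflation adjustment.
implied_multiplier = COST_1979_IN_2014_DOLLARS / COST_1979_NOMINAL
ratio = real_increase(COST_1979_IN_2014_DOLLARS, COST_2014)
hours_1979 = hours_of_work(COST_1979_NOMINAL, MIN_WAGE_1979)
hours_2014 = hours_of_work(COST_2014, MIN_WAGE_2014)

print(f"implied 1979->2014 price multiplier: {implied_multiplier:.2f}")
print(f"real increase per credit hour: {ratio:.2f}x")
print(f"minimum-wage hours per credit hour: {hours_1979:.1f} (1979) vs {hours_2014:.1f} (2014)")
```

On those assumed wages, a 2014 credit hour takes exactly seven times as many minimum-wage hours as a 1979 one (about 59.1 versus about 8.4), because tuition grew by a factor of 17.5 (428.75 / 24.50) while the wage grew by only 2.5 (7.25 / 2.90).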

By CNu at April 08, 2014 | 0 comments

Labels: Collapse Casualties , debt slavery , edumackation

if college is only exploratory and family connections ensure future job prospects...,

By CNu at April 08, 2014 | 0 comments

Labels: Collapse Casualties , edumackation

Monday, April 07, 2014

Bro.Feed Not Scurred, Politically Correct, or Intellectually Consti(err...., nevermind.)

whatever you think of cultural non-judgementalism in the abstract, what it has meant in reality is that the pathologies of lower-class black culture have been played out on other black folks and on non-black neighbors.

REPLACE IT WITH MEASUREMENT!!!!!

GIVE The "Black Racial Services Machine" and the "Post-Racial Progressive Fundamentalist Alliance" - both of whom have FUSED The Americanized Negro's "Black Agenda" with PROGRESSIVE POLITICAL (Only) Expression - THESE MANDATES!!!!

1) INCREASE the Black graduation rate to the point at which the new market of TECHNICALLY EDUCATED BLACKS can be a DISRUPTIVE MARKET FORCE in the key areas within the Black Community Marketplace (Health Care, Business Management, etc.)

2) LOWER THE BLACK HOMICIDE VICTIMIZATION RATE from 47% down to 25% IN 5 YEARS, moving it closer to our 14% POPULATION PROPORTION

3) GROW THE LOCAL BLACK ECONOMY BY 50% IN 5 YEARS, to the point where it can CREATE JOBS, maintain the LOCAL TAX BASE, and serve the retail and service needs of the people within a 4-mile radius

4) REDUCE THE NUMBER OF 'OUT OF RESIDENCE BLACK FATHERS' DOWN TO 20% - as the Black Community acknowledges the critical importance of having a BLACK MAN AND WOMAN working collaboratively to care for their children. RECHRISTEN "The Black Church" from its claim to having been created in response to WHITE SUPREMACY (what Rev. Raphael Warnock of Ebenezer Church in Atlanta said) OVER TO the CENTERPIECE OF THE BLACK COMMUNITY SOCIAL SERVICES STRUCTURE

CNu - IF the AMERICANIZED NEGRO'S "Struggle Motion" is not REMOVED FROM STRAIGHT UP POLITICAL OPPORTUNISM these CORRUPT NEGROES on MSNBC will be allowed to retain their platform and MOLEST THE AMERICANIZED NEGRO'S AGENDA - for ONLY the benefit of PROGRESSIVISM - the condition of the Negro BE DAMNED.

In the absence of Jim Crow, America has organically resegregated itself with a vengeance - with managerial and professional class blacks spatially and socially distancing themselves from lower-class black cultural pathology.

Do YOU believe that Michelle Alexander believes that the presence of AG Eric Holder, his boss Barack Obama, and a DISTRICT ATTORNEY and SHERIFF (jailer) and POLICE CHIEF who was appointed by a MAYOR - ALL OF WHOM BLACKS VOTED FOR - means that THE NEW JIM CROW IS DEAD?

The one thing that I learn from:

* Reading "The Final Call"

* Listening to "Black Progressive Radical Radio"

* Watching Videos

...IS...

THERE IS NO CORRELATION between the PRESENCE OF ESTABLISHMENT FORCES THAT THE AMERICANIZED NEGRO VOTED FOR BEING IN POWER and the RHETORIC that the RADICAL REVOLUTIONARY NEGROES will tell their CONGREGATION is still out there LURKING, hoping to cut every Black person's throat IF we don't remain UNIFIED.

The final irony is that Ras Baraka, son of Amiri Baraka, is poised to become Mayor of Newark.

Amiri Baraka told of the day that the last White mayor of Newark pulled him out of jail and brought him to the mayor's OFFICE, where he was BEATEN UP ON THE FLOOR.

The REVOLUTIONARIES/RADICALS have ultimately become POLITICAL CANDIDATES (see Chokwe Lumumba).

And now the biggest threat facing Ras Baraka is that HIS CAMPAIGN BUS WAS BURNED by an operative from his BLACK DEMOCRATIC opponent.

UNTIL BLACK PEOPLE REGULATE THE ACCESS THAT POLITICS HAS TO THEIR "CONSCIOUSNESS ABOUT THEMSELVES," THE TRUTH REMAINS THAT "YOU DON'T HAVE TO DEVELOP THE NEGRO THROUGH POLITICS": KEEPING HIS ATTENTION ALIGNED WITH 'YOUR TEAM' IS ALL THAT MATTERS FOR YOU TO SUCCEED AT HIS EXPENSE.

By CNu at April 07, 2014 | 0 comments

Labels: A Kneegrow Said It

the answers ARE knowable, but not if you consult the politically correct and intellectually constipated academy

What I am getting from Coates’ argument (and what I don’t think Chait is picking up on) is that he believes that white middle-class norms and poor black norms are different but equal cultural norms. Assuming that middle-class norms are superior to black lower-class norms is therefore a value judgement. Suggesting that lower-class black people throw aside the norms that are ‘theirs’ to take up white middle-class norms is therefore an act of white supremacy, an imposition of ‘foreign’ norms on the weaker party.

This is a novel argument but not really a politically practical one. Come to think of it, it's not only impractical; I’d say it is politically self-destructive, since whatever you think of cultural non-judgementalism in the abstract, what it has meant in reality is that the pathologies of lower-class black culture have been played out on other black folks and on non-black neighbors. In the absence of Jim Crow, America has organically resegregated itself with a vengeance - with managerial and professional class blacks spatially and socially distancing themselves from lower-class black cultural pathology.

The turd in the "can't we all just get along" punch bowl is that no one with common sense is able to ignore the very high rate at which lower-class blacks victimize themselves and others. It's not simply a matter of rudeness, incivility, and lack of regard for the commons. If one wanted to be generous about pathological lower-class black culture, one could say that the "warrior spirit" has the ratchets "going for theirs." One might even romanticize the ratchets as 21st-century pirates. But then, with regard to pirates and pirate culture, the answers are historically knowable. As we now know, pirates had far more common sense and practical good judgement when it came to committing acts of irrational violence and incivility within their own ranks...,

By CNu at April 07, 2014 | 55 comments

Labels: A Kneegrow Said It , Ass Clownery

irrational violence of individuals against each other is detrimental to the profitable enterprise

By CNu at April 07, 2014 | 2 comments

the steadily increasing probability of death camps...,

By CNu at April 07, 2014 | 0 comments

Labels: Collapse Casualties , cull-tech , What Now?

Sunday, April 06, 2014

the cultural cognition project

Related video: lecture on the cultural cognition of risk

By CNu at April 06, 2014 | 1 comment

Labels: quorum sensing? , stigmergy , What IT DO Shawty...

Saturday, April 05, 2014

Great Debate Transcending Our Origins: Violence, Humanity, and the Future

By CNu at April 05, 2014 | 10 comments

Labels: school

Friday, April 04, 2014

inclusive fitness 50 years on...,

where −c is the impact that the trait has on the individual's own reproductive success, b_i is its impact on the reproductive success of the individual's i-th social partner and r_i is the genetic relatedness of the two individuals. This mathematical partition of fitness effects underpins the kin selection approach to evolutionary biology [8].

The general principle is that with regards to social behaviours, natural selection is mediated by any positive or negative consequences for recipients, according to their genetic relatedness to the actor. Consequently, individuals should show greater selfish restraint, and can even behave altruistically, when interacting with closer relatives [4].
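The excerpt defines the terms of Hamilton's rule, but the inequality itself did not survive the clipping. In its standard form the rule says a social trait is favoured by selection when −c + Σ_i b_i r_i > 0. Below is a minimal Python sketch of that inequality. The `Partner` class, the function names, and the example numbers are hypothetical illustrations, not taken from the paper.

```python
from dataclasses import dataclass

@dataclass
class Partner:
    benefit: float      # b_i: effect on social partner i's reproductive success
    relatedness: float  # r_i: genetic relatedness between actor and partner i

def inclusive_fitness_effect(cost: float, partners: list[Partner]) -> float:
    """Return -c + sum_i b_i * r_i for a trait with cost c to the actor."""
    return -cost + sum(p.benefit * p.relatedness for p in partners)

def favoured_by_selection(cost: float, partners: list[Partner]) -> bool:
    """Hamilton's rule: the trait is favoured when its inclusive fitness effect is positive."""
    return inclusive_fitness_effect(cost, partners) > 0

# Hypothetical numbers for illustration only: the act costs the actor 1 unit
# of reproductive success and gives 3 units to a single recipient.
full_sibling = [Partner(benefit=3.0, relatedness=0.5)]
first_cousin = [Partner(benefit=3.0, relatedness=0.125)]

print(inclusive_fitness_effect(1.0, full_sibling))  # 0.5
print(favoured_by_selection(1.0, full_sibling))     # True
print(inclusive_fitness_effect(1.0, first_cousin))  # -0.625
print(favoured_by_selection(1.0, first_cousin))     # False
```

Cost and benefit are held fixed and only relatedness changes between the two cases, which is the excerpt's point about restraint and altruism toward closer relatives in miniature.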


By CNu at April 04, 2014 | 31 comments

Labels: ethology , Genetic Omni Determinism GOD , stigmergy , tactical evolution

microbial genes, brain & behaviour – epigenetic regulation of the gut–brain axis

By CNu at April 04, 2014 | 0 comments

Labels: Genetic Omni Determinism GOD , microcosmos , symbiosis , What IT DO Shawty...

bringing pseudo-science into the science classroom

By CNu at April 04, 2014 | 0 comments

Labels: tactical evolution , The Hardline , truth

Thursday, April 03, 2014

the dawn of monotheism revisited

- "Like the accounts of the historian Manetho, the Talmudic stories contain many distortions and accretions arising from the fact that they were transmitted orally for a long time before finally being set down in writing. Yet one can sense that behind the myths there must have lain genuine historical events that had been suppressed from the official accounts of both Egypt and Israel, but had survived in the memories of the generations" (p.24). "The Alexandrian Jews were naturally interested in Manetho's account of their historic links with Egypt, although they found some aspects of it objectionable. His original work therefore did not survive for long before being tampered with [2/3 of Zarathushtra's Avesta reportedly was even deliberately destroyed]" (p.27). And: "Yoyotte ... became one of the few to see through the 'embellishments' of the biblical account and identify the historical core of the story ..." (p.48).

- "... the Koran presents the confrontation in such a precise way that one wonders if some of the details were left out of the biblical account deliberately. Here Moses sounds less like a magician, more like someone who presents evidence of his authority that convinces the wise men of Egypt, who throw themselves at his feet and thus earn the punishment of [an imposter] Pharaoh. One can only suspect that the biblical editor exercised care to avoid any Egyptian involvement with the Israelite Exodus, even to the extent of replacing Moses by Aaron in the performance of the rituals. ... [During] their sed festival celebrations, Egyptian kings performed rituals that correspond to the 'serpent rod' and 'hand' rituals performed by Moses - and, in performing them, Moses was not using magic but seeking to establish his royal authority.

I think the correct interpretation of these accounts [of the Bible and the Koran] is that, when Akhenaten was forced to abdicate, he must have taken his royal sceptre to Sinai with him. On the death of Horemheb, the last king of the Eighteenth Dynasty, about a quarter of a century later, he must have seen an opportunity to restore himself to the throne. No heir to the Tuthmosside kings existed and it was Pa-Ramses, commander of Horemheb's army and governor of Zarw, who had claim to the throne. Akhenaten returned to Egypt and the wise men were gathered in order to decide between him and Pa-Ramses. Once they saw the sceptre of royal authority and Akhenaten had performed the sed festival rituals - secret from ordinary citizens - the wise men bowed the knee in front of him, confirming that his was the superior right to the throne, but Pa-Ramses used his army to crush the rebels. Moses was allowed to leave again for Sinai, however, accompanied by the Israelites, his mother's relatives, and the few Egyptians who had been converted to the new [monotheistic] religion that he had attempted to force upon Egypt a quarter of a century earlier. In Sinai the followers of Akhenaten were joined subsequently by some bedouin tribes (the Shasu), who are to be identified as the Midianites of the Bible. No magic was performed, or intended, by Moses. The true explanation of the biblical story could only be that it was relating the political challenge for power in a mythological way - and all the plagues of which we read were natural, seasonal events in Egypt in the course of every year. ..." (p.178f)

"This would explain how a new version of the Osiris-Horus myth came into existence from the time of the Nineteenth Dynasty. Osiris, the King of Egypt, was said to have had to leave the country for a long time. On his eventual return he was assassinated by Set, who had usurped the throne, but Horus, the son of Osiris, confronted Set at Zarw and slew him. According to my interpretation of events, it was in fact 'Set' who slew 'Horus'; but their roles were later reversed by those who wished to believe in an eternal life for Horus [alternatively, if their roles were not reversed, that might support the idea that Moses/Akhenaton had a role to play in Canaan/Palestine in the post-exodus period]. This new myth developed to the point where Osiris/Horus became the principal god worshipped in Egypt in later times while Set was looked upon as the evil one. This myth could have been a popular reflection of a real historical event - a confrontation between Moses and Seti I on top of the mountain in Moab." (p.187f)

- "No primary source of information on Moses exists outside the Bible. ... [In] the Haggadah's hymnic confession Dayyeinu, ... Israel's career from Egypt to the settlement is rehearsed in 13 stages without a reference to Moses. ... According to Artapanos, Moses ... was the first philosopher, and invented a variety of machines for peace and war. He was also responsible for the political organization of Egypt (having divided the land into 36 nomes) ... According to Josephus, Moses was the most ancient of all legislators in the records of the world. Indeed, he maintains that the very word 'law' was unknown in ancient Greece (Jos., Apion 2:154). ...

Hecataeus of Abdera presented Moses as the founder of the Jewish state, ascribing to him the conquest of Palestine and the building of Jerusalem and the Temple. He explained, in the Platonic manner, that Moses divided his people into 12 tribes, because 12 is a perfect number, corresponding to the number of months in the year (cf. Plato, Laws, 745b-d; Republic, 546b). ... Very curious is the legend recorded by Israel Lipschuetz b. Gedaliah (Tiferet Yisrael to Kid. end, n.77). A certain King, having heard of Moses' fame, sent a renowned painter to portray Moses' features.

On the painter's return with the portrait the king showed it to his sages, who unanimously proclaimed that the features portrayed were those of a degenerate [4]. The astonished king journeyed to the camp of Moses and observed for himself that the portrait did not lie. Moses admitted that the sages were right and that he had been given from birth many evil traits of character but that he had held them under control and succeeded in conquering them. This, the narrative concludes, was Moses' greatness, that, in spite of his tremendous handicaps, he managed to become the man of God. Various attempts have, in fact, been made by some rabbis to ban the further publication of this legend as a denigration of Moses' character."

By CNu at April 03, 2014 | 17 comments

Labels: History's Mysteries , waaay back machine

publicly funded schools where creationism is taught...,

By CNu at April 03, 2014 | 0 comments

Labels: de-evolution , the wattles , theoconservatism

Wednesday, April 02, 2014

believe it or not: a narrative antidote to daystarism....,

By CNu at April 02, 2014 | 21 comments

Labels: narrative , People Centric Leadership

daystar?

By CNu at April 02, 2014 | 0 comments

Labels: deceiver , status-seeking

Tuesday, April 01, 2014

global system of conehead supremacy?

By CNu at April 01, 2014 | 26 comments

Labels: Genetic Omni Determinism GOD , high strangeness , History's Mysteries

those big heads though...,

By CNu at April 01, 2014 | 0 comments

Labels: high strangeness , History's Mysteries

dna analysis of paracas skulls unknown to any human, primate, or animal...,

By CNu at April 01, 2014 | 0 comments

Labels: high strangeness , History's Mysteries

moses and akhenaton

By CNu at April 01, 2014 | 0 comments

Labels: ancient , high strangeness , History's Mysteries
